Abstract

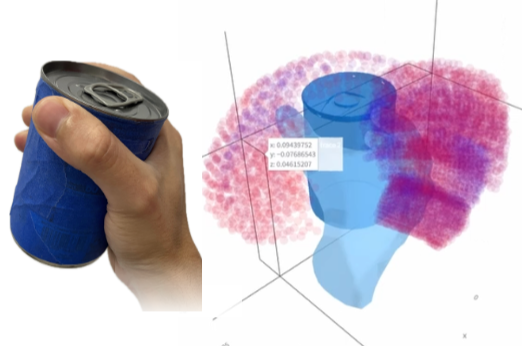

Designing user interfaces for handheld objects requires understanding how users naturally position their fingers. However, finger placement varies considerably with individual hand anatomy and with the object being held. In this paper, we introduce GraspR, a computational model that predicts user preferences for single-finger microgestures in GraspUIs. Drawing on a dataset of user-elicited finger placements across various objects and grasp types, GraspR can accurately suggest the most ergonomic and intuitive locations for UI elements. We demonstrate how GraspR can be used to create adaptive interface layouts that automatically adjust to the user's current grasp, maximizing interaction efficiency and comfort. Our technical evaluation shows that GraspR outperforms baseline models in predicting preferred interaction areas, a step toward more personalized and accessible gestural interfaces on everyday objects.